Week 1: Building an App to Replace Myself

I'm a sound designer, and I'm trying to build an app that will replace me. Follow along as I either succeed wildly or fail spectacularly - probably the latter.

The Problem That Started This

Every time I work on brand videos, I run into the same issue: I'm not a musician, but I need music that accompanies the sound design and syncs perfectly with how the video is cut. When you watch a commercial and the beat hits right when the logo appears, that timing isn't accidental, and it's a pain in the ass to get right when you're working with pre-made music tracks and pre-made videos.

I've been using music generators and trying to stretch their output to match video cuts, but it doesn't sound professional. It takes up a lot of time, and I'm awkwardly trying to force a 120 BPM track onto a video that doesn't have a set rhythm. So I thought: what if I could upload a video and a music reference, and an app would generate new music that actually matches the video's rhythm?

Product

I asked on Reddit: Any tool/plugin that creates custom music matching your video's rhythm and cuts?

I mainly wanted to see what video editors think about this space. It seems they're happy with, and used to, changing their video cuts to match the music or, if anything, getting a composer to write music specifically for their video. So a "composer for video" app seems like the thing that would solve my own problem as well as others'.

Going Down the Wrong Rabbit Hole

My first instinct was to go big. Use existing music generation APIs like Mubert, describe what I want, generate music, then slice it up to match the video. This is a terrible idea for about five reasons, but the main one is that you end up destroying the musical structure that makes a song actually listenable.

Then I thought about building a "vault" of licensed music stems and having a script compose specific songs for videos. This would probably work amazingly well, but the licensing costs would bankrupt me before I even had a working prototype. Plus, I'm trying to build something in a month, not start a music licensing company.

Thinking Smaller

Instead of trying to solve the entire music-for-video problem, I focused on just percussion. When I really thought about it, drums were what I actually needed most of the time. The main issue with AI beat generators wasn't that they sounded bad; it was that they couldn't match the tempo and rhythm of my videos.

Drums are also way more forgiving than full music tracks. You can cut them up, stretch them, and rearrange them without destroying the musical integrity. A drum hit is a drum hit. And honestly, you can make drum sounds out of anything. There's this Google AI project that generates drum beats from random materials, which got me thinking about building something similar but specifically for video sync.

The Technical Bits

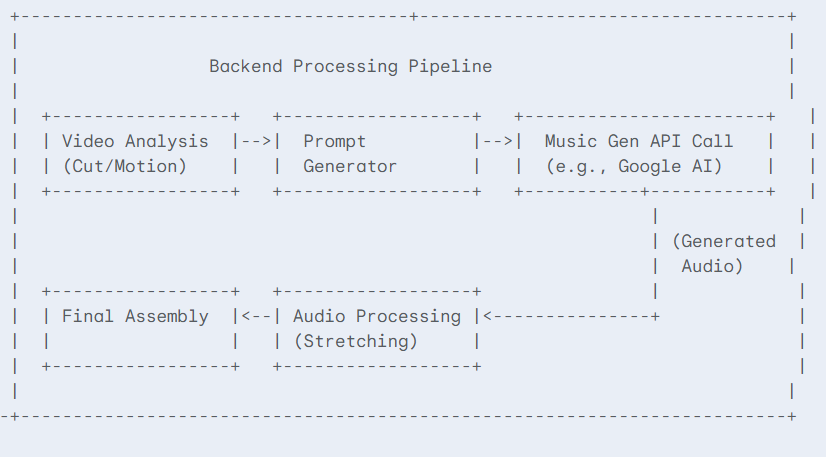

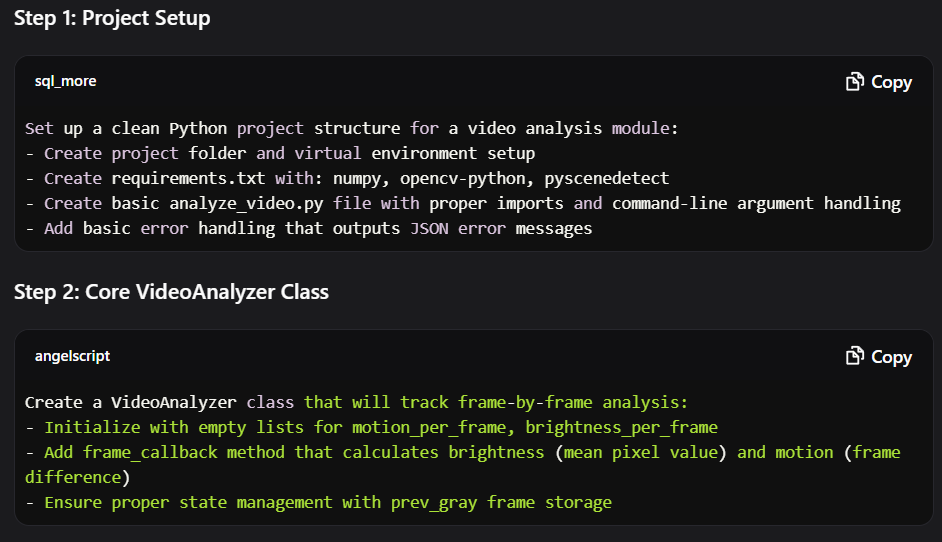

So I started building. My approach was to use AI to create the app structure and the prompts for Cursor (an AI coding tool).

First, I had Gemini create a plan for the whole app structure. Then I asked it for a detailed breakdown of the video analysis part specifically. I took that plan to Claude and said "turn this into prompts I can feed to Cursor to actually build this thing."

Using Cursor I got some basic code working that analyses videos for energy levels and identifies where cuts happen.
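
For a sense of what that kind of analysis can look like, here's a minimal sketch. It is not the code Cursor produced. It assumes you already have one "difference score" per pair of consecutive frames (for example, the mean absolute pixel difference), and the function names, the 3-sigma threshold, and the one-second energy window are all placeholder choices for illustration.

```python
from statistics import mean, pstdev


def detect_cuts(frame_diffs, fps, threshold_sigmas=3.0):
    """Return timestamps (in seconds) where the frame difference spikes.

    frame_diffs[i] is assumed to be a difference score between frame i
    and frame i + 1. A spike far above the average is treated as a cut.
    """
    if not frame_diffs:
        return []
    threshold = mean(frame_diffs) + threshold_sigmas * pstdev(frame_diffs)
    # The cut lands on the first frame of the new shot, hence i + 1.
    return [(i + 1) / fps for i, diff in enumerate(frame_diffs) if diff > threshold]


def energy_per_second(frame_diffs, fps):
    """Average the difference scores over one-second windows as a rough energy curve."""
    window = max(1, int(round(fps)))
    return [
        mean(frame_diffs[start:start + window])
        for start in range(0, len(frame_diffs), window)
    ]


# Synthetic example: 50 quiet frame transitions with two abrupt changes.
diffs = [1.0] * 50
diffs[11] = 40.0
diffs[35] = 40.0
print(detect_cuts(diffs, fps=24))  # [0.5, 1.5]
```

A fixed statistical threshold like this will miss slow fades and can misfire on fast camera moves, so treat it as a starting point rather than a reliable cut detector.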

Next step was connecting this to Soundraw's API. Soundraw lets you generate music and then tweak it - mute instruments, adjust tempo, change the mood. So I fed both my video analysis results and Soundraw's API docs back to Gemini, asking it to create a plan for generating the perfect prompt for Soundraw. Then back to Claude to turn that plan into more Cursor prompts.

The chain was then: Video analysis → Soundraw prompt creation → Soundraw music generation.

After executing the code and getting the prompt, this is the song that was generated:

The result was educational, let's say. Even if I mute everything except drums and bass, trying to chop it up to match my video cuts would be basically impossible without ruining the song. I might need to separate even the drums into individual stems to have any hope of making this work.

I'm starting to think I might be fighting music theory itself here. Good music follows patterns that evolved over centuries for a reason. When I try to force those patterns to match random video cuts, I'm basically asking music to be something it fundamentally isn't.

But I want to see if there's a middle ground. Could I find the ideal BPM for the video's rhythm - the tempo where drum hits would match most of the cuts - and then use swing and subtle timing adjustments to nail the remaining ones? If a drum hit needs to land 50 milliseconds off the beat to sync perfectly with a cut, maybe that's acceptable. Could sound amazing, could sound terrible. Only one way to find out.
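
The "ideal BPM" idea can be sketched as a brute-force search: try every tempo in a range and count how many cuts land close enough to a beat. This is only a rough sketch under some loud assumptions: the first beat is assumed to sit at time 0, the 60 to 180 BPM range is arbitrary, and `score_bpm` and `best_bpm` are hypothetical names, not part of the app.

```python
def score_bpm(cuts, bpm, tolerance=0.05, subdivisions=1):
    """Count the cuts that fall within `tolerance` seconds of a grid line.

    The grid starts at time 0 (an assumption) and has `subdivisions`
    lines per beat, so subdivisions=2 also accepts cuts on the off-beat.
    """
    step = 60.0 / bpm / subdivisions
    hits = 0
    for t in cuts:
        nearest_line = round(t / step) * step
        if abs(t - nearest_line) <= tolerance:
            hits += 1
    return hits


def best_bpm(cuts, bpm_range=range(60, 181), tolerance=0.05, subdivisions=1):
    """Return the tempo whose grid catches the most cuts (lowest tempo wins ties)."""
    return max(bpm_range, key=lambda bpm: score_bpm(cuts, bpm, tolerance, subdivisions))


# Cut times in seconds, e.g. from the video analysis step.
cuts = [0.5, 1.0, 2.0, 3.5]
print(best_bpm(cuts, tolerance=0.02))  # 120
```

One caveat with this scoring: faster tempos have denser grids, so with a generous tolerance they match more cuts almost by accident. Keeping the tolerance tight, or penalising high tempos, would be needed before trusting the result on a real video.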

Next Week

I want to test whether I can actually restructure existing drum patterns to match video timing. I'll take some drum stems, give them to my analysis code, and see if the restructured version still sounds decent. If it doesn't work with stems, this whole approach is probably dead in the water.
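
As a rough picture of what "restructuring" could mean at its simplest, here's a sketch that nudges existing drum hits onto nearby cuts and leaves everything else alone. The function name is hypothetical, the hit and cut times are assumed to be plain lists of seconds, and the 50 ms limit is just the figure floated above, not a tested value.

```python
def nudge_hits_to_cuts(hit_times, cut_times, max_shift=0.05):
    """Move the nearest drum hit onto each cut if the shift is small enough.

    Each hit can be claimed by at most one cut. Hits further than
    `max_shift` seconds from every cut keep their original timing.
    """
    adjusted = list(hit_times)
    claimed = set()
    for cut in cut_times:
        candidates = [i for i in range(len(hit_times)) if i not in claimed]
        if not candidates:
            break
        nearest = min(candidates, key=lambda i: abs(hit_times[i] - cut))
        if abs(hit_times[nearest] - cut) <= max_shift:
            adjusted[nearest] = cut
            claimed.add(nearest)
    return adjusted


# Hits on a 120 BPM grid; one cut is 30 ms late, the other is nowhere near a hit.
hits = [0.0, 0.5, 1.0, 1.5]
cuts = [0.53, 1.2]
print(nudge_hits_to_cuts(hits, cuts))  # [0.0, 0.53, 1.0, 1.5]
```

Cuts that stay unmatched (like the one at 1.2 seconds here) are the interesting cases: they would need an extra hit, a different tempo, or a decision to let that cut go unsynced.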

If anyone reading this has tried something similar, or has thoughts on music generation for video, I'd love to hear about it. I have no clue if this direction could actually work, but that's half the fun.

